Do We Really Need Feature Selection in a Data Analysis Pipeline?

Defining the feature selection problem and identifying its advantages and disadvantages show its value

First published at Towards Data Science, by Pavlos Charonyctakis.

In a typical data analysis or supervised machine learning task, the goal is to construct a predictive model for a variable of interest (also called target or outcome), using a set of predictors (also called features, variables or attributes). The pipeline of this predictive model construction typically consists of several steps, such as feature extraction/construction (e.g., using dimensionality reduction techniques), preprocessing (e.g., missing value imputation, standardization), feature selection, and model training. Any of these steps, except the model training, of course, can be omitted. This article is focusing on why we do need a feature selection step in our data analysis pipeline. To answer this question, we need at first to define what feature selection is. Next, we need to check its advantages and disadvantages so as to conclude with the final verdict.

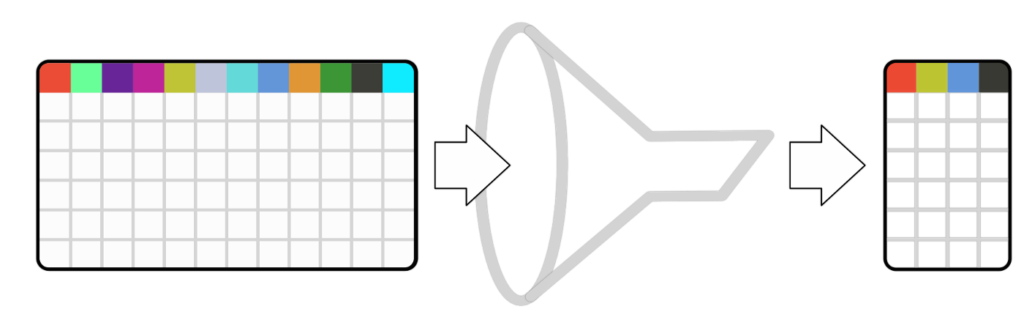

What is feature selection?

In machine learning and statistics, feature selection, also known as variable selection, attribute selection, or variable subset selection, is the process of selecting a subset of relevant features (also called features, variables or attributes) for use in model construction, someone reads in Wikipedia. This is a relatively vague definition that does not make clear the goal of the feature selection. A clearer definition is the following: Feature Selection can be defined as the problem of selecting a minimal-size subset of the variables that collectively (multi-varietally) contain all predictive information necessary to produce an optimally predictive model for a target variable (outcome) of interest.

Thus, the goal of the feature selection task is to filter out the irrelevant or redundant variables given the ones already selected and select only the ones providing collectively unique information for the outcome of interest leading to an optimal predictive model in terms of performance.

Advantages of using feature selection

By selecting only a few features (the most significant ones) and removing the remaining ones from consideration, it is often the case that a better model can be learned, especially in high-dimensional settings. This is counterintuitive, as an ideal machine learning modeling algorithm should be able to perform at least as well as without applying feature selection, as the information provided by the selected features is already contained in the provided data. Indeed, asymptotically (i.e., as the sample size tends to infinity) and given a perfect/ideal learning algorithm (in practice, there is no such algorithm), there is no reason to perform feature selection for a predictive task.

In practice, however, solving the feature selection problem has several advantages. By removing irrelevant and redundant features, the “job” of the modeling algorithm is getting easier and thus often leads to better final models. Another advantage of getting easier the task of the learning algorithm is that its training time becomes shorter and uses fewer resources (e.g., memory). This is because a good selection of features facilitates modeling, particularly for algorithms susceptible to the curse of dimensionality.

Feature selection is a common component in supervised machine learning pipelines and is essential when the goal of the analysis is knowledge discovery. Knowledge discovery is really important in some domains such as molecular biology and life sciences, where a researcher is mainly interested in understanding the underlying mechanism of the problem. Selecting a feature subset leads to simplification of models to make them easier to interpret by researchers/users. Finally, an important aspect of feature selection is the cost optimization that a user can achieve by using a model with fewer features. This is especially important if it is very expensive to measure certain features, and each feature is associated with a cost.

Disadvantages of feature selection

The feature selection problem is NP-hard. There are several approaches to solve the problem exactly (also called the best subset selection problem) only for linear models. Although the results are promising, exact approaches are only able to handle a few hundred or thousand variables at most (so, they are not applicable on high dimensional data).

In order to efficiently solve the feature selection problem, most approaches rely on some kind of approximation. The majority of approximate approaches can be roughly categorized into stepwise methods such as FBED, sparsity-based methods such as LASSO, information-theoretic methods, and causal-based methods. Even though they are approximations and do not solve the exact problem, they are still optimal for a large class of distributions or under certain conditions.

I mentioned before as an advantage that selecting only a feature subset leads to simplified models trained in shorter times. Unfortunately, it is often the case that the task of the feature selection per se is that much slower that diminishes the benefit of the faster model training. However, this can be optimized by caching the selected features and thus applying the feature selection only once.

Another shortcoming of the vast majority of the feature selection methods is that they arbitrarily seek to identify only one solution to the problem. However, in practice, it is often the case that multiple predictive or even information equivalent solutions exist. Especially in domains with inherent redundancy present in the underlying problem. Molecular biology is such a case where often multiple solutions exist.

While a single solution is acceptable for building an optimal predictive model, it is not sufficient when feature selection is applied for knowledge discovery. In fact, it may even be misleading. For example, if several sets of risk factors in a medical study are collectively equally predictive for an event, then it is misleading to return only one of them and claim that the rest are superfluous.

Final verdict

In short, I would recommend yes, we need a feature selection step in our data analysis pipeline. Even if the list of advantages and disadvantages in this article is not exhaustive, I think the benefits of employing feature selection are clearly shown. Especially in cases where the task contains high-dimensional data, it is of priority the knowledge discovery and/or the different features are associated with different costs. In fact, the best we can do (this is our choice in JADBio) is to test configurations both with feature selection and without. This, of course, comes with any resource limitations that we need to take into account, but in any case, there are various tricks we can try. Finally, do not forget that the “winner’s curse” is ever-present to haunt you creating numerous models (Can you trust AutoML?).

Pavlos Charonyktakis is a scientific programmer at JADBio. As a scientific programmer, he developed the Just Add Data tool, a complete statistical analysis pipeline for performing feature selection, identifying and interpreting predictive signatures. He also contributed to the development of the Biosignature Discoverer plug-in for identifying molecular signatures for the CLCbio bioinformatics platform as well as to a highly optimized machine learning and statistics library. He received his B.Sc. in 2011 and his M.Sc. in 2015 from the Computer Science Department of the University of Crete. From 2009 until 2015, he had been a research assistant (with scholarship) at the Institute of Computer Science, Foundation for Research and Technology-Hellas. He has been the author or co-author of 6 journal and peer-reviewed conference articles.