Survival analysis, also known as time-to-event analysis, is the field of statistics that applies specific methodologies to study the amount of time it takes before a predetermined event takes place. “Survival” in this context refers to remaining free of a particular outcome over time [1].

Survival Analysis in JADBio

via Professor I. Tsamardinos

In typical survival analysis problems, one measures a handful of features (covariates) that may be predictive of the time to disease, complication, relapse, metastasis, or other events. Separate Kaplan-Meier curves are plotted for the strata of patients with high and low values of the covariates. Covariates are tested one at a time for correlation with survival time, to identify whether any of them provides predictive information. If the answer is affirmative, then maybe a scientific publication is in order.

Now imagine that you actually measure hundreds of thousands or even millions of features. These can be multi-omics markers, genetic markers, lifestyle factors, clinical variables, etc. They could even be X-rays, histopathological images, or other medical images. You are looking to identify the combination of marker values that leads to the best prediction of survival, filtering out markers that are informative but redundant: for example, identifying that a particular combination of a gene expression level, a SNP, and a feature in the X-ray stratifies patients as high risk. You are looking for the optimal survival prediction model, whether parametric or non-parametric, linear or non-linear. There is another problem. You know nothing of AI or machine learning and you can’t even code. You just want to solve the problem, not get a Ph.D. in statistics today. Oh, and you are late reporting the results, so it would be nice if you could do this analysis in the next 30 minutes. Is this even possible?

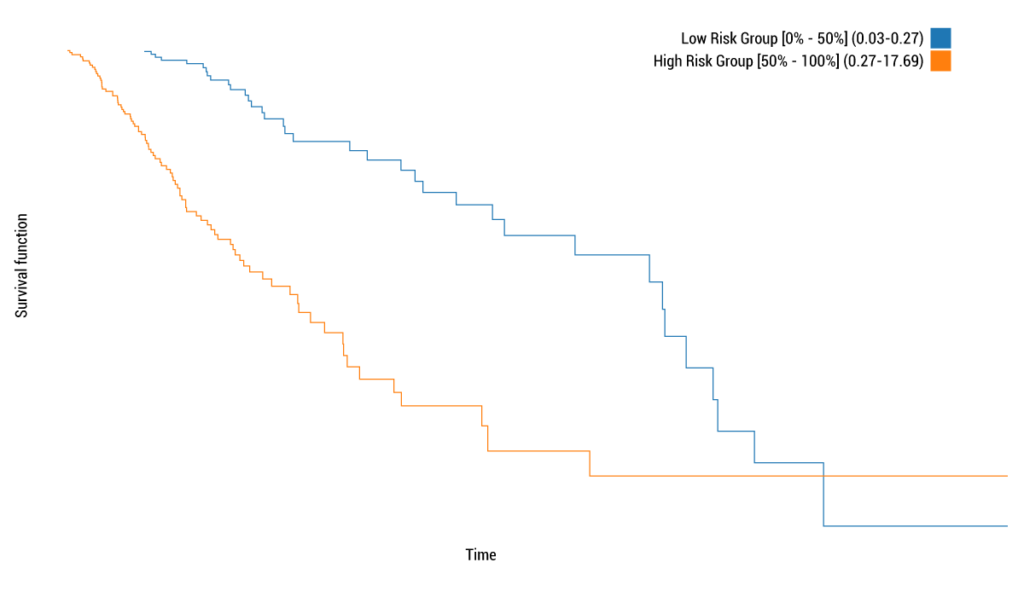

Yes, it is. JADBio offers fully automated survival analysis: no math and no coding are required, you just add data. JADBio performs feature selection over hundreds of thousands of markers and image features, filtering out not only the irrelevant markers but also the redundant ones.

Take, for example, the problem of predicting survival time after surgery for low-grade glioma patients from miRNA profiles measured in tumor biopsies. The data are provided by The Cancer Genome Atlas initiative and are included in the TCGA public project of case studies. They contain profiles of 501 patients measuring 619 miRNAs, the follow-up time, and whether the patient was deceased or not (censored outcome) at the end of the follow-up period. The analysis results are shared at the following link: https://app.jadbio.com/share/d9bfbf09-f445-405b-b792-9c71b0654fee.

The analysis takes about 2 hours and 20 minutes, during which JADBio tries 101 machine learning pipelines (configurations) and trains 3030 predictive models. The winning model has a predictive power of about 0.80 as measured by the concordance index. The interpretation is that, given two patients and their miRNA profiles, the model correctly predicts which of them will die first with a probability of about 80%. Out of the 619 miRNAs, JADBio discovers that only a subset of 12 is actually necessary for the prediction. In fact, it discovers 3 such sets of 12 miRNAs, leading to models with statistically indistinguishable predictive power. This gives choices to diagnostic assay designers and a more complete picture to drug designers choosing drug targets.

Based on the model’s predictions, JADBio automatically stratifies patients into high-risk and low-risk groups, depending on whether they rank in the top 50% of risk scores or not. The following picture shows the Kaplan-Meier curves of the two groups (the patient scores are out-of-sample scores, meaning they were produced by a model not trained on these patients):

As with every JADBio analysis, you also get confidence intervals of performance, theoretical guarantees of correct estimation, visuals for interpreting the role of the features in the prediction and their added value, a model calculator for running what-if scenarios, and much more useful information. But it is time for me to do another analysis…
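To make the concordance index mentioned above more concrete, here is a minimal sketch in plain Python. It is not JADBio's implementation: the function name, the toy data, and the simplified handling of ties are assumptions made for illustration only. A pair of patients counts as comparable when the one with the shorter follow-up actually had the event.

```python
from itertools import combinations

def concordance_index(times, events, risk_scores):
    """Harrell-style C-index: share of comparable pairs ranked correctly."""
    concordant = 0.0
    comparable = 0
    for i, j in combinations(range(len(times)), 2):
        # Let `first` be the patient with the shorter observed time.
        first, second = (i, j) if times[i] <= times[j] else (j, i)
        # Simplification: skip tied times, and skip pairs where the shorter
        # time is censored, because we cannot tell who had the event first.
        if times[first] == times[second] or not events[first]:
            continue
        comparable += 1
        if risk_scores[first] > risk_scores[second]:
            concordant += 1.0   # higher predicted risk had the event first
        elif risk_scores[first] == risk_scores[second]:
            concordant += 0.5   # tied predictions count as half
    return concordant / comparable

# Hypothetical toy data: follow-up time, event flag (1 = death observed,
# 0 = censored) and a model's risk score for five patients.
times = [5, 8, 12, 3, 10]
events = [1, 0, 1, 1, 0]
risk_scores = [0.9, 0.4, 0.2, 0.7, 0.5]

c_index = concordance_index(times, events, risk_scores)
print(round(c_index, 3))  # 6 of the 7 comparable pairs are ranked correctly
```

A value of 0.5 corresponds to random ranking and 1.0 to perfect ranking. The sketch raises a division error if no pair is comparable, which a production implementation would need to handle.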

Survival analysis originated in the medical and biological fields, which use it to study rates of death, organ failure, and the onset of various diseases [2]. This is why many associate survival analysis with negative events. The tool can be, and is, applied equally to positive events, for example to determine how long it may take someone to win the lottery if they play every week.

In the health disciplines, survival analysis provides predictive models of the time elapsed until a certain event. In medicine, the event is often, though not always, the death of the subject under investigation. Healthcare researchers apply survival analysis to model a wide variety of ‘events’, such as a patient’s lifespan from cradle to grave, or the time from cancer treatment to a patient’s death.

Cardiologists and other scientists in the field may estimate the time it takes for a patient to have a second heart attack after the first, predict the progression of atrial fibrillation, or estimate the time from the manufacture of a medical device component, say a heart valve, to that component’s failure to function as expected. Survival analysis has perhaps been especially critical in helping pinpoint the time it takes for an HIV diagnosis to develop into AIDS, which has contributed to precise treatments, announced in the first months of 2021, that render HIV undetectable and therefore untransmittable.

The competing risks issue is one in which there are several possible outcome events of interest. For example, a prospective study may be conducted to assess risk factors for time to incident cardiovascular disease. Cardiovascular disease includes myocardial infarction, coronary heart disease, coronary insufficiency, and many other conditions. The investigator measures whether each of the component outcomes occurs during the study observation period, as well as the time to each distinct event. The goal of the analysis is to determine the risk factors for each specific outcome, taking into account that the outcomes are correlated.

Survival analysis essentially models historical time-to-event data to estimate the chance that the event is observed, or how that chance changes, over a specific period. As time moves on, specific events often become more or less likely to happen.

Using survival models to predict outcomes is a powerful aid that helps health and medical professionals make precise, real-time decisions [3]. Survival analysis also helps them form more accurate predictions than ‘guessing’ based on previous experience or on raw percentage statistics. It takes into account how different variables, referred to as independent predictor variables or covariates, affect the expected time until an event occurs [4].

Although survival analysis was initially applied in the biomedical sciences, to help experts analyze the time to death of patients or of laboratory animals, it is widening its scope to include other areas of research and other fields of study.

Several major insurance companies use survival analysis to predict when an insured person will die, whether and when a customer will cancel or renew a policy, and how long it will take to file and resolve a claim. The results help insurance providers calculate premiums, perform risk assessments, and estimate the lifetime value of their clients.

Applying Survival Analysis

Various modeling approaches may be applied, depending on the objective of the time-to-event analysis [5]. Let us start with the definition of covariates: in essence, the characteristics (other than the actual treatment) that describe the subjects in a given experiment. For example, when a young man experiences a heart attack, we set the event itself aside and analyze all other potential factors, which may include the patient’s lifestyle, diet, job, the area where he lives, and any family history of heart disease.

More examples:

• Tumor recurrence time

• Intervention treatment leading to cardiovascular death

Censoring

Censoring [6] occurs when we have some information about a subject’s event time but do not know the exact event time. For survival analysis methods to be valid, the censoring mechanism has to be independent of the survival mechanism.

One of the greatest challenges in applying survival analysis is that a subset of subjects will not experience the event being studied within the observation period, so their survival times remain unknown to the researcher. In other cases, a subject experiences a different event that makes further follow-up impossible. For example, after a few years some patients leave their job due to a debilitating illness in order to support their treatment.

Incorporating the censored data in the calculations is essential, because leaving it out or mishandling it biases the resulting predictions.
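A small numeric sketch can illustrate that bias. The event times and the study cut-off below are hypothetical values chosen for the example; the two "naive" estimates stand for the common shortcuts of dropping censored subjects or treating their last follow-up time as an event time.

```python
# Hypothetical true event times (in months) for six subjects.
true_times = [2, 4, 6, 8, 10, 12]
study_end = 7  # follow-up stops here, so later events are right-censored

# What the researcher actually records: a time and an "event seen" flag.
observed = [min(t, study_end) for t in true_times]
event_seen = [t <= study_end for t in true_times]

true_mean = sum(true_times) / len(true_times)

# Naive option 1: drop the censored subjects entirely.
events_only = [t for t, seen in zip(observed, event_seen) if seen]
mean_dropping_censored = sum(events_only) / len(events_only)

# Naive option 2: pretend each censoring time was an event time.
mean_treating_as_events = sum(observed) / len(observed)

print(true_mean, mean_dropping_censored, mean_treating_as_events)
# prints: 7.0 4.0 5.5
```

Both shortcuts underestimate the true mean survival time in this example, which is why survival methods keep the censored records and use them as partial information.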

There are generally three reasons we see censoring:

• The patient does not experience the event we are studying before the study ends

• The patient is lost during the follow-up phase of the study

• The patient withdraws from the study

Types of censoring

Right Censoring: The event has not occurred by the end of the observation period, so the event time is known only to lie beyond the last observed time. This is by far the most common type of censoring.

Left Censoring: The subject is known to have had the event before the beginning of the observation, but the exact time of the event is unknown.

Interval Censoring: The event is known to have occurred between two observation times, but we do not know exactly when.
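One way to picture the three types is to store every observation as an interval that is known to contain the true event time. The sketch below is only an illustration of that idea; the class name, the field names, and the convention that a lower bound of 0 means "before observation began" are assumptions, not part of any particular library.

```python
from dataclasses import dataclass
from math import inf

@dataclass(frozen=True)
class Observation:
    """The true event time is only known to lie between lower and upper."""
    lower: float
    upper: float

    def kind(self) -> str:
        if self.lower == self.upper:
            return "exact"              # event time observed directly
        if self.upper == inf:
            return "right-censored"     # event happens after `lower`, if ever
        if self.lower == 0:
            return "left-censored"      # event happened before `upper`
        return "interval-censored"      # event happened between two visits

# Hypothetical subjects, times in months since the start of observation.
subjects = [
    Observation(5, 5),     # event seen at month 5
    Observation(7, inf),   # still event-free when follow-up ended at month 7
    Observation(0, 3),     # event already present at the first check-up
    Observation(2, 4),     # event-free at month 2, event found at month 4
]
kinds = [s.kind() for s in subjects]
print(kinds)
```

Most software for right-censored data uses the more compact encoding of one time plus one event flag per subject, which is the special case where only the first two kinds occur.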

Assumptions in Censoring

Before discussing the assumptions, we need to distinguish two key concepts: informative and non-informative censoring [7].

Informative censoring occurs when subjects are lost for reasons related to the study.

Non-informative censoring occurs when subjects are lost for reasons unrelated to the study. For example, after a few years some subjects opt out of buying a car even though they can afford it.

Adding covariates to a survival analysis matters because it increases the accuracy of our predictions [8].

Non-parametric models

These make no assumptions about the shape of the hazard or survival function. Tests of this kind can examine whether survival differs between subpopulations. The approach is limited, however, in that only categorical covariates can be examined and it cannot quantify how a covariate affects survival.
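A widely used test of this kind is the log-rank test, which compares the number of events observed in one group with the number expected if both groups shared the same survival. The following is a minimal two-group sketch in plain Python with hypothetical data. The function name is an assumption, and the p-value uses the chi-square distribution with one degree of freedom.

```python
from math import erfc, sqrt

def logrank_test(times_a, events_a, times_b, events_b):
    """Two-group log-rank test; returns (chi-square statistic, p-value)."""
    data = [(t, e, 0) for t, e in zip(times_a, events_a)]
    data += [(t, e, 1) for t, e in zip(times_b, events_b)]
    observed_a = expected_a = variance = 0.0
    # Walk through every distinct time at which an event was observed.
    for t in sorted({u for u, e, _ in data if e}):
        n_a = sum(1 for u, _, g in data if u >= t and g == 0)  # at risk, A
        n_b = sum(1 for u, _, g in data if u >= t and g == 1)  # at risk, B
        d_a = sum(1 for u, e, g in data if u == t and e and g == 0)
        d_b = sum(1 for u, e, g in data if u == t and e and g == 1)
        n, d = n_a + n_b, d_a + d_b
        observed_a += d_a
        expected_a += d * n_a / n          # expected events in A under H0
        if n > 1:                          # hypergeometric variance term
            variance += d * (n_a / n) * (n_b / n) * (n - d) / (n - 1)
    chi2 = (observed_a - expected_a) ** 2 / variance
    p_value = erfc(sqrt(chi2 / 2))         # chi-square tail, 1 d.o.f.
    return chi2, p_value

# Hypothetical groups: everyone in A has the event before anyone in B.
chi2, p_value = logrank_test([1, 2], [1, 1], [3, 4], [1, 1])
print(round(chi2, 3), round(p_value, 3))
```

The sketch divides by the accumulated variance, so it assumes both groups contain subjects at risk at some event time. With only two subjects per group the p-value is of course not meaningful beyond illustrating the arithmetic.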

Semi-parametric models (Cox models)

Here we assume that the hazard can be written as a baseline hazard, which depends solely on time, multiplied by a factor that depends only on the covariates and not on time. Under this assumption, known as the proportional hazards assumption, we can estimate the effect of both categorical and continuous covariates through parameters while leaving the baseline hazard unspecified.
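In symbols, this model is usually written in the standard textbook form below. The notation is introduced here for illustration and does not come from the text: $h_0(t)$ is the baseline hazard, $x$ the covariate vector, and $\beta$ the vector of coefficients to be estimated.

```latex
h(t \mid x) = h_0(t)\,\exp\!\left(\beta^{\top} x\right)
\qquad\Longrightarrow\qquad
\frac{h(t \mid x_1)}{h(t \mid x_2)} = \exp\!\left(\beta^{\top}(x_1 - x_2)\right)
```

The ratio of the hazards of two subjects with covariates $x_1$ and $x_2$ does not depend on $t$, because the baseline hazard cancels. This is what "proportional" refers to, and it is why the baseline can be left unspecified when estimating $\beta$.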

Parametric models

Here we must specify the form of the hazard function in the model. If a well-fitting model can be found, the resulting statistical tests are more powerful than those for semi-parametric models. In addition, there are no restrictions on how covariates may affect the hazard. These models are often used because predictions are straightforward to obtain from them.
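As an illustration, the simplest parametric choice is a constant hazard, which gives the exponential model. This choice is an assumption made here for the example and is not singled out in the text. With right-censored data, the maximum-likelihood estimate of the hazard rate is the number of observed events divided by the total follow-up time, and the survival function is S(t) = exp(-rate * t). The function names and data below are hypothetical.

```python
from math import exp

def fit_exponential(times, events):
    """Maximum-likelihood hazard rate of an exponential survival model.

    Censored subjects contribute follow-up time to the denominator
    but no event to the numerator.
    """
    return sum(events) / sum(times)

def survival_probability(rate, t):
    """S(t) = exp(-rate * t): probability of remaining event-free past t."""
    return exp(-rate * t)

# Hypothetical data: follow-up in months, 1 = event observed, 0 = censored.
times = [5, 8, 12, 3, 10]
events = [1, 0, 1, 1, 0]

rate = fit_exponential(times, events)        # 3 events over 38 months
print(round(rate, 4))
print(round(survival_probability(rate, 12), 3))
```

Once the rate is fitted, a prediction for any time point is a single formula evaluation, which illustrates the ease of prediction mentioned above. The price is that the constant-hazard assumption has to be plausible for the data.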

Basic Principles

As stated at the very beginning, survival analysis is a set of statistical approaches used to investigate the time it takes for an ‘event’ of interest to happen. In cancer studies, survival analysis is used to analyze patients’ literal survival time. Typical research questions in oncology that call for survival analysis include [9]:

• Does the patient’s survival depend on the impact of certain clinical characteristics?

• How probable is it for a patient to survive 3 years?

• Do different groups of patients present with different survival times?

When considering cancer studies, survival analyses most often apply these methods:

• Kaplan-Meier estimates, to plot survival curves

• The log-rank test, to compare the survival curves of two or more groups

• Cox proportional hazards regression, to describe the effect of several variables on survival
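The first of these methods, the Kaplan-Meier estimate, can be sketched in a few lines of plain Python. At every observed event time, the running survival probability is multiplied by the fraction of at-risk subjects who did not have the event. The function names and the toy data (in years) are hypothetical.

```python
def kaplan_meier(times, events):
    """Return [(t, S(t))] for each distinct observed event time t."""
    curve = []
    survival = 1.0
    for t in sorted({u for u, e in zip(times, events) if e}):
        at_risk = sum(1 for u in times if u >= t)
        deaths = sum(1 for u, e in zip(times, events) if u == t and e)
        survival *= 1 - deaths / at_risk
        curve.append((t, survival))
    return curve

def survival_at(curve, t):
    """Step-function lookup: estimated probability of surviving beyond t."""
    prob = 1.0
    for event_time, s in curve:
        if event_time > t:
            break
        prob = s
    return prob

# Hypothetical follow-up in years; 1 = death observed, 0 = censored.
times = [1, 2, 2, 4, 5, 6]
events = [1, 1, 0, 1, 0, 1]

curve = kaplan_meier(times, events)
three_year_survival = survival_at(curve, 3)
print(round(three_year_survival, 3))  # estimated chance of surviving 3 years
```

Censored subjects never trigger a step in the curve, but they stay in the at-risk count until their last follow-up time, which is how the estimator uses their partial information.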

The fundamental terms of survival analysis include:

• Survival time and event

• Censoring

• Survival function and hazard function
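For reference, the last pair of terms has the following standard definitions. The notation is an assumption introduced here rather than taken from the text: $T$ is the random event time, $S(t)$ the survival function, and $h(t)$ the hazard function.

```latex
S(t) = P(T > t),
\qquad
h(t) = \lim_{\Delta t \to 0} \frac{P(t \le T < t + \Delta t \mid T \ge t)}{\Delta t},
\qquad
S(t) = \exp\!\left(-\int_0^t h(u)\,du\right)
```

The survival function gives the probability of remaining event-free beyond time $t$, while the hazard is the instantaneous event rate among those still at risk at $t$. The third identity shows that either function determines the other.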

Survival time and type of events in cancer studies

Different types of events in health and medicine include:

• Relapse

• Progression

• Death

Survival time (or time to event): the time from the patient’s response to treatment (complete remission) until the event of interest occurs.

Cancer and Survival Analysis

In cancer studies, the two most important measures are the time to death and the relapse-free survival time, which is the time between a patient’s response to a specified treatment and the recurrence of the disease. The latter is also called ‘disease-free survival time’ or ‘event-free survival time’.

Various queries of specific interest in cancer studies performing survival analysis include:

– How likely is a participant to survive five years?

– Do different groups present with different survival? (for example, patients receiving a new protocol vs. the standard medical protocol vs. placebo in a clinical trial)

– Do behavioral, personal, or clinical characteristics affect patients’ chances of survival?

Survival analysis is increasingly being adopted in biotechnology, and other fields such as economics, marketing, machine maintenance, and engineering are trying it as well [10].

Time-to-event data, or survival data, are often analyzed in studies of significant medical and public health issues. Because of the distinctive features of survival data, specifically the presence of censoring, special statistical procedures are needed to analyze them. In survival analysis applications, the main interest is usually in estimating the survival function, that is, survival probabilities over a certain period. Of the various techniques available, the two used most often are both non-parametric: the life table (or actuarial table) approach and the Kaplan-Meier approach to constructing cohort (follow-up) life tables. Both produce an estimate of the survival function, which can be used to calculate the probability that a participant survives to a specific time (e.g., 5 or 10 years).
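The life table (actuarial) approach can be sketched as follows. Follow-up is cut into fixed-width intervals, and subjects censored within an interval are counted as being at risk for half of it, which is the usual actuarial convention. The function name, the interval width, and the data are hypothetical, and the sketch assumes non-negative times.

```python
def life_table(times, events, width):
    """Actuarial life table with fixed-width intervals [start, end).

    Returns rows of (start, end, n_at_start, deaths, censored, survival),
    where survival is the estimated probability of surviving past `end`.
    """
    rows = []
    survival = 1.0
    n_at_start = len(times)
    start = 0
    while n_at_start > 0:
        end = start + width
        inside = [e for t, e in zip(times, events) if start <= t < end]
        deaths = sum(1 for e in inside if e)
        censored = len(inside) - deaths
        # Actuarial convention: censored subjects count as half at risk.
        effective_at_risk = n_at_start - censored / 2
        survival *= 1 - deaths / effective_at_risk
        rows.append((start, end, n_at_start, deaths, censored, survival))
        n_at_start -= len(inside)
        start = end
    return rows

# Hypothetical follow-up in years; 1 = death observed, 0 = censored.
times = [1, 2, 3, 4, 6, 7, 8, 9]
events = [1, 0, 1, 1, 0, 1, 0, 1]

table = life_table(times, events, width=5)
for row in table:
    print(row)
```

With these data the first interval has 8 subjects, 3 deaths, and 1 censored subject, so the 5-year survival estimate is 1 - 3/7.5 = 0.6. The Kaplan-Meier approach differs in that it updates the estimate at each exact event time instead of at fixed interval boundaries.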

Pros and Cons of Survival Analysis

Other more common statistical methods may shed some light on how long it could take something to happen. For example, regression analysis, which is commonly used to determine how specific factors such as the price of a commodity or interest rates influence the price movement of an asset, might help predict survival times and is a straightforward calculation.

The problem is that linear regression works with both positive and negative numbers, whereas survival analysis deals with time, which is strictly positive. More importantly, linear regression cannot account for censoring, that is, survival data that are incomplete for various reasons. This is especially true of right-censoring, where the subject has not yet experienced the expected event by the end of the studied period.

The main benefit of survival analysis is that it handles censoring better: alongside time, its main variable records whether the expected event happened or not. For this reason, it is perhaps the technique best suited to answering time-to-event questions in multiple industries and disciplines.

Time-to-event data, or survival data, are frequently measured in studies of important medical and public health issues. Because of the unique features of survival data, most notably the presence of censoring, special statistical procedures are necessary to analyze them. In survival analysis applications, it is often of interest to estimate the survival function, or survival probabilities over time [11].

Several techniques are available; two popular non-parametric ones are the life table (or actuarial table) approach and the Kaplan-Meier approach to constructing cohort life tables or follow-up life tables. Both generate estimates of the survival function, which can be used to estimate the probability that a participant survives to a specific time (e.g., 5 or 10 years).

Resources:

1. webfocusinfocenter.informationbuilders.com/wfappent/TLs/TL_rstat/source/Survival45.htm

2. sciencedirect.com/topics/medicine-and-dentistry/survival-analysis

3. sciencedirect.com/science/article/pii/S1756231716300639

4. statisticshowto.com/survival-analysis/

5. academic.oup.com/bioinformatics/article/26/15/1887/188146

6. sphweb.bumc.bu.edu/otlt/mph-modules/bs/bs704_survival/BS704_Survival_print.html

7. mygreatlearning.com/blog/introduction-to-survival-analysis

8. stat.columbia.edu/~madigan/W2025/notes/survival.pdf

9. academic.oup.com/bioinformatics/article/26/15/1887/188146

10. sthda.com/english/wiki/survival-analysis-basics

11. investopedia.com/terms/s/survival-analysis.asp